Sqoop("SQL-to-Hadoop").簡單來說它是用來協助開發人員將資料庫的資料轉換到HDFS的一項工具,不過要到Hadoop 0.21.0版本釋出才會正式的納進來,但如果現在就要使用的話可以直接Patch HADOOP-5815來使用。

Sqoop主要是透過JDBC來和各個資料庫連結,並自動產生O/R Mapping的java檔,最後透過MapReduce將資料庫的資料轉換到HDFS之中,下述是筆者的範例測試:

Sqoop example for MySQL

下載JDBC Driver for MySQL (Connector/J 5.1),並設定好它的CLASSPATH。

資料庫: test

資料表: student

create table student(

id int not null primary key,

name varchar(20),

score tinyint

);

預設三筆資料:

mysql> select * from student; +----+------+-------+ | id | name | score | +----+------+-------+ | 1 | mary | 98 | | 2 | jack | 94 | | 3 | john | 40 | +----+------+-------+ 3 rows in set (0.00 sec)

這裡的目標是指定將student table轉出到HDFS之中:

hadoop jar sqoop.jar org.apache.hadoop.sqoop.Sqoop --connect jdbc:mysql://localhost/test --table student

執行過程:

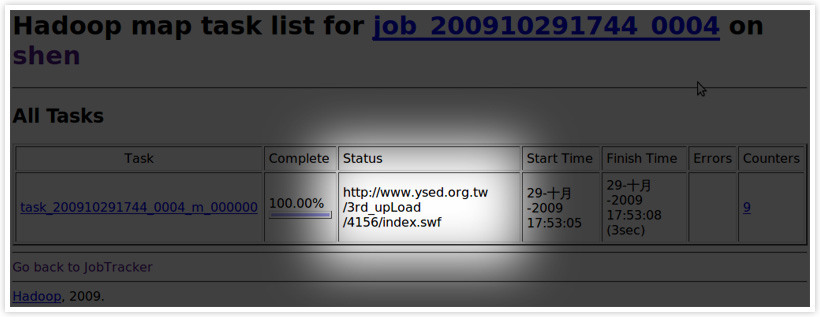

09/10/30 06:48:47 INFO sqoop.Sqoop: Beginning code generation 09/10/30 06:48:48 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM student AS t WHERE 1 = 1 09/10/30 06:48:48 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM student AS t WHERE 1 = 1 09/10/30 06:48:48 INFO orm.CompilationManager: HADOOP_HOME is /home/hdp/hadoop-0.20.1/bin/.. 09/10/30 06:48:48 INFO orm.CompilationManager: Found hadoop core jar at: /home/hdp/hadoop-0.20.1/bin/../hadoop-0.20.1-core.jar 09/10/30 06:48:48 INFO orm.CompilationManager: Invoking javac with args: -sourcepath ./ -d /tmp/sqoop/compile/ -classpath /home/hdp/hadoop-0.20.1/bin/../conf:/usr/lib/jvm/java-6-sun-1.6.0.10/lib/tools.jar:/home/hdp/hadoop-0.20.1/bin/..:/home/hdp/hadoop-0.20.1/bin/../hadoop-0.20.1-core.jar:/home/hdp/hadoop-0.20.1/bin/../lib/commons-cli-1.2.jar:/home/hdp/hadoop-0.20.1/bin/../lib/commons-codec-1.3.jar:/home/hdp/hadoop-0.20.1/bin/../lib/commons-el-1.0.jar:/home/hdp/hadoop-0.20.1/bin/../lib/commons-httpclient-3.0.1.jar:/home/hdp/hadoop-0.20.1/bin/../lib/commons-logging-1.0.4.jar:/home/hdp/hadoop-0.20.1/bin/../lib/commons-logging-api-1.0.4.jar:/home/hdp/hadoop-0.20.1/bin/../lib/commons-net-1.4.1.jar:/home/hdp/hadoop-0.20.1/bin/../lib/core-3.1.1.jar:/home/hdp/hadoop-0.20.1/bin/../lib/hsqldb-1.8.0.10.jar:/home/hdp/hadoop-0.20.1/bin/../lib/jasper-compiler-5.5.12.jar:/home/hdp/hadoop-0.20.1/bin/../lib/jasper-runtime-5.5.12.jar:/home/hdp/hadoop-0.20.1/bin/../lib/jets3t-0.6.1.jar:/home/hdp/hadoop-0.20.1/bin/../lib/jetty-6.1.14.jar:/home/hdp/hadoop-0.20.1/bin/../lib/jetty-util-6.1.14.jar:/home/hdp/hadoop-0.20.1/bin/../lib/junit-3.8.1.jar:/home/hdp/hadoop-0.20.1/bin/../lib/kfs-0.2.2.jar:/home/hdp/hadoop-0.20.1/bin/../lib/log4j-1.2.15.jar:/home/hdp/hadoop-0.20.1/bin/../lib/oro-2.0.8.jar:/home/hdp/hadoop-0.20.1/bin/../lib/servlet-api-2.5-6.1.14.jar:/home/hdp/hadoop-0.20.1/bin/../lib/slf4j-api-1.4.3.jar:/home/hdp/hadoop-0.20.1/bin/../lib/slf4j-log4j12-1.4.3.jar:/home/hdp/hadoop-0.20.1/bin/../lib/sqoop.jar:/home/hdp/hadoop-0.20.1/bin/../lib/xmlenc-0.52.jar:/home/hdp/hadoop-0.20.1/bin/../lib/jsp-2.1/jsp-2.1.jar:/home/hdp/hadoop-0.20.1/bin/../lib/jsp-2.1/jsp-api-2.1.jar:.:/home/hdp/hadoop-0.20.1/bin/../mysql-jdbc.jar:/home/hdp/hadoop-0.20.1/bin/../hadoop-0.20.1-core.jar ./student.java 09/10/30 06:48:49 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop/compile/student.jar 09/10/30 06:48:49 INFO mapred.ImportJob: Beginning data import of student 09/10/30 06:48:50 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM student AS t WHERE 1 = 1 09/10/30 06:48:50 INFO mapred.JobClient: Running job: job_200910300539_0002 09/10/30 06:48:51 INFO mapred.JobClient: map 0% reduce 0% 09/10/30 06:49:04 INFO mapred.JobClient: map 50% reduce 0% 09/10/30 06:49:07 INFO mapred.JobClient: map 100% reduce 0% 09/10/30 06:49:09 INFO mapred.JobClient: Job complete: job_200910300539_0002 09/10/30 06:49:09 INFO mapred.JobClient: Counters: 6 09/10/30 06:49:09 INFO mapred.JobClient: Job Counters 09/10/30 06:49:09 INFO mapred.JobClient: Launched map tasks=2 09/10/30 06:49:09 INFO mapred.JobClient: FileSystemCounters 09/10/30 06:49:09 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=30 09/10/30 06:49:09 INFO mapred.JobClient: Map-Reduce Framework 09/10/30 06:49:09 INFO mapred.JobClient: Map input records=3 09/10/30 06:49:09 INFO mapred.JobClient: Spilled Records=0 09/10/30 06:49:09 INFO mapred.JobClient: Map input bytes=3 09/10/30 06:49:09 INFO mapred.JobClient: Map output records=3

在執行Sqoop上述指令後,它會自動產生一個「student.java」檔(ORM),並打包在「/tmp/sqoop/compile/student.jar」,而這個檔案除了包含「student.class」,同時也包含「sqoop.jar」在它的lib資料夾內,執行成功之後就可以在HDFS之中看到轉換後的資料。

從上述的執行過程中,不難發現其實Sqoop只是透過Map Phase來進行轉換。

相關資源